Use Harness IDP for self-service Harness CI/CD onboarding

In this tutorial, we will create a self-service onboarding flow that creates a new service from a cookiecutter template, adds it to the Harness IDP software catalog as a software component using a catalog-info.yaml file, and then provisions a Deployment Pipeline for the newly created service using the Harness Terraform Provider.

Prerequisites:

- Make sure you are assigned the IDP Admin Role or another role that has full access to all IDP resources.

- Create a GitHub connector named democonnector at the account scope. This connector should be configured for a GitHub organization (personal accounts are currently not supported by this tutorial).

- A delegate with Terraform installed on it.

Create a Pipeline

Begin by creating a pipeline for onboarding the service.

To create a Developer Portal stage, perform the following steps:

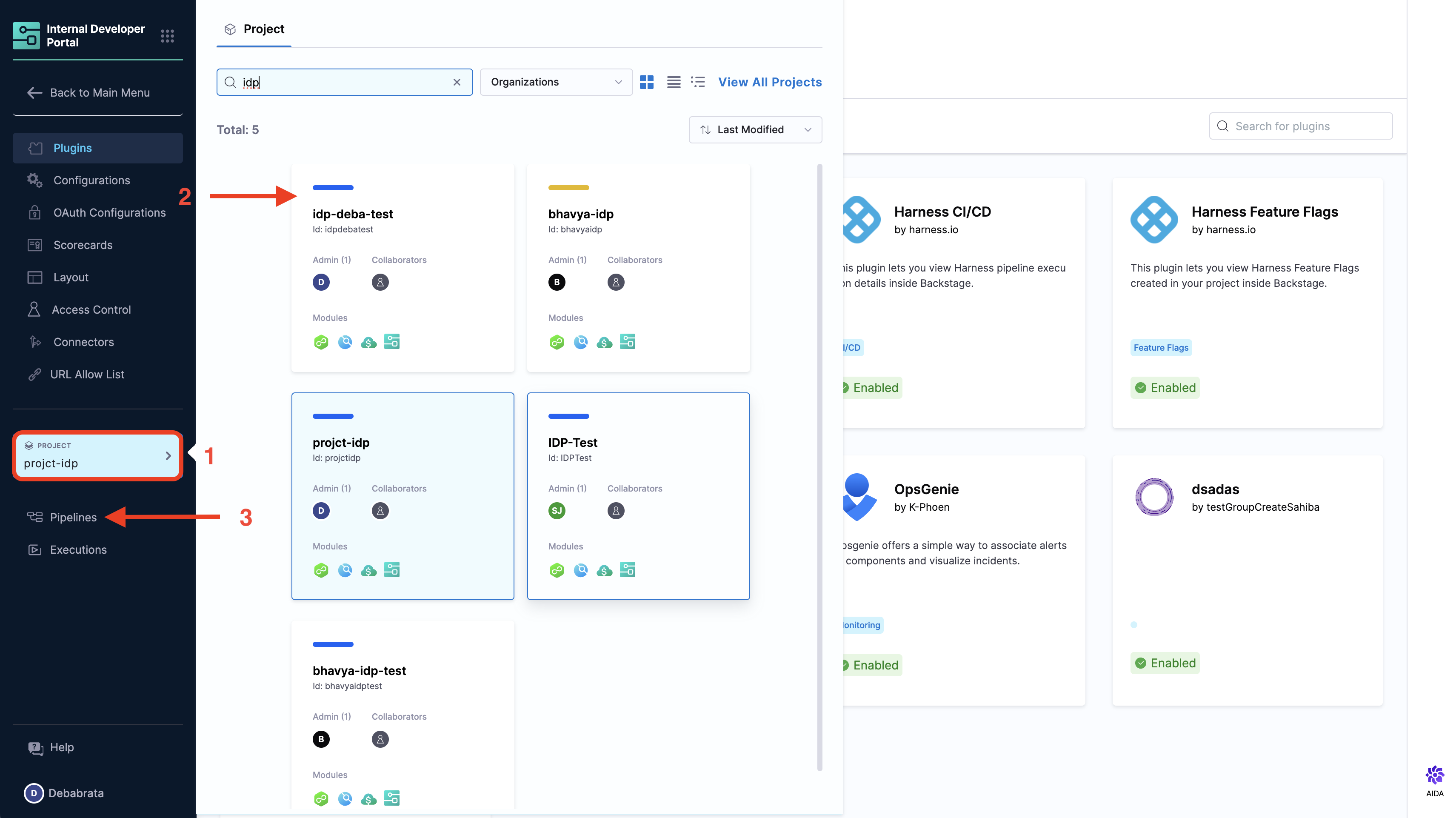

- Go to Admin section under IDP, select Projects, and then select a project.

You can also create a new project for the service onboarding pipelines. Eventually, all the users in your account should have permissions to execute the pipelines in this project. For information about creating a project, go to Create organizations and projects.

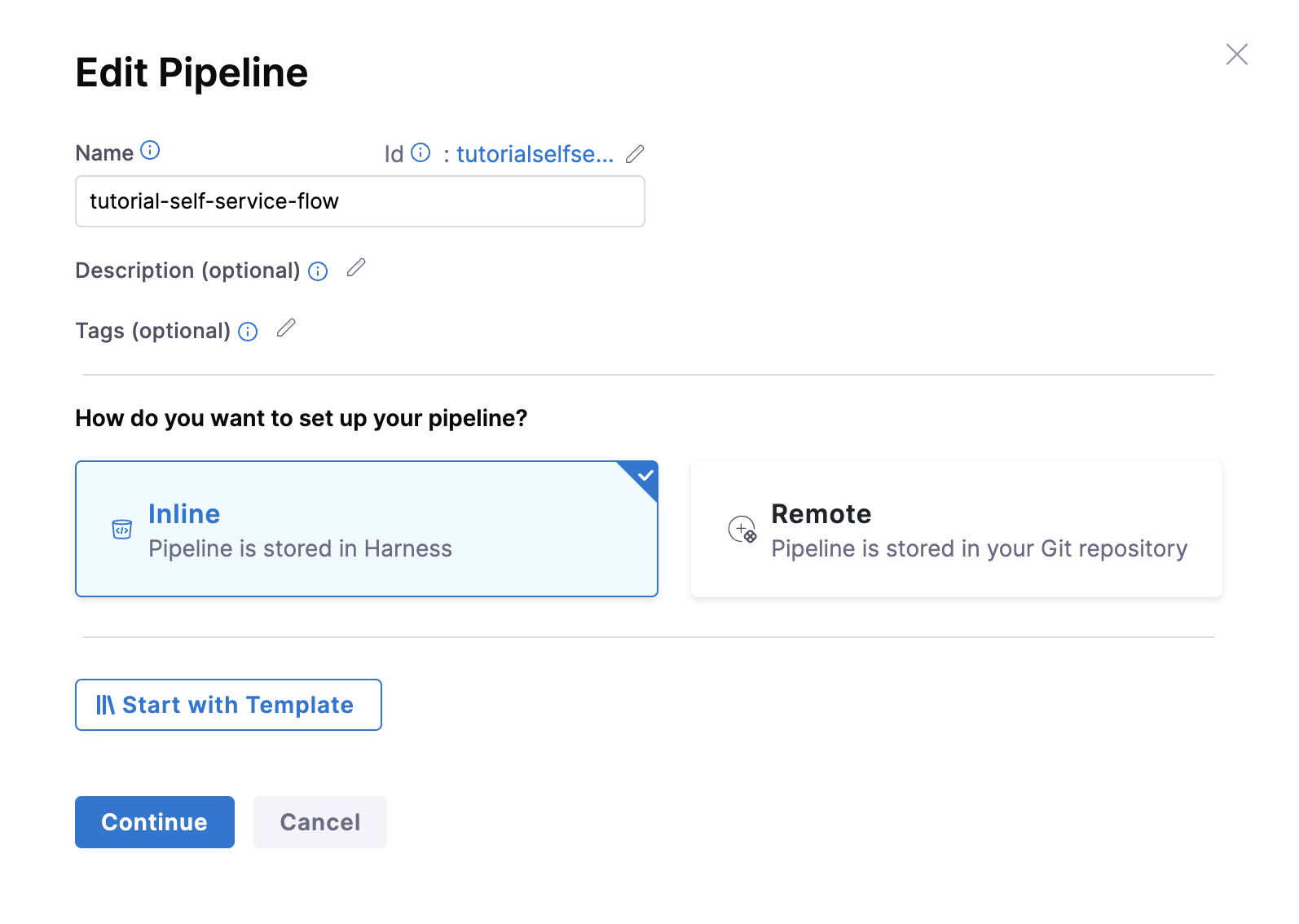

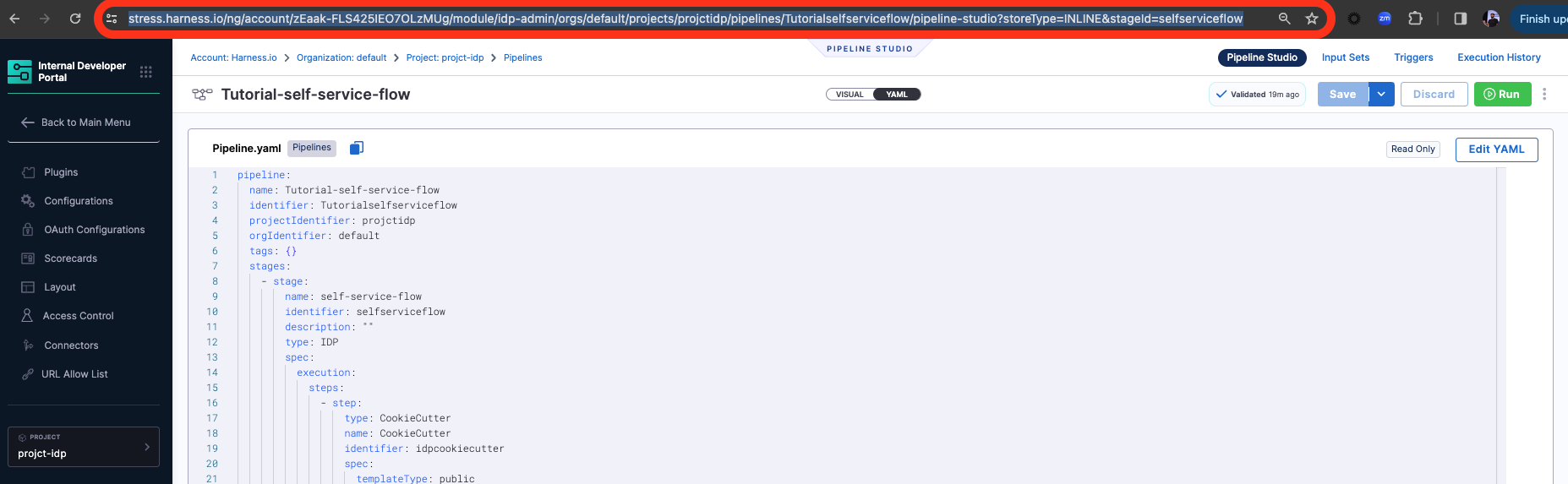

- Then select Create a Pipeline, add a name for the pipeline, and select Inline as the type.

- The YAML below defines an IDP Stage with a number of steps (as described here) that perform the actions to onboard the new service. Copy the YAML below; then, in the Harness Pipeline Studio, switch to the YAML view and paste it below the existing YAML.

You must complete all the prerequisites for the YAML below to work properly.

Update connectorRef: <the_connector_name_you_created_under_prerequisites> in every step that uses it. This example assumes GitHub as the Git provider; if you use a different provider, update the connectorType in the CreateRepo, DirectPush, and RegisterCatalog steps. If your pipeline includes a Slack Notify step, set its token field to the identifier of the token you created as part of the prerequisites.

  stages:
    - stage:
        name: self-service-flow
        identifier: selfserviceflow
        description: ""
        type: IDP
        spec:
          execution:
            steps:
              - step:
                  type: CookieCutter
                  name: CookieCutter
                  identifier: idpcookiecutter
                  spec:
                    templateType: public
                    publicTemplateUrl: <+pipeline.variables.public_cookiecutter_template_url>
                    cookieCutterVariables:
                      app_name: <+pipeline.variables.project_name>
              - step:
                  type: CreateRepo
                  name: Create Repo
                  identifier: createrepo
                  spec:
                    connectorType: Github
                    connectorRef: account.democonnector
                    organization: <+pipeline.variables.organization>
                    repository: <+pipeline.variables.project_name>
                    repoType: public
                    description: <+pipeline.variables.repository_description>
                    defaultBranch: <+pipeline.variables.repository_default_branch>
              - step:
                  type: CreateCatalog
                  name: Create IDP Component
                  identifier: createcatalog
                  spec:
                    fileName: <+pipeline.variables.catalog_file_name>
                    filePath: <+pipeline.variables.project_name>
                    fileContent: |-
                      apiVersion: backstage.io/v1alpha1
                      kind: Component
                      metadata:
                        name: <+pipeline.variables.project_name>
                        description: <+pipeline.variables.project_name> created using self service flow
                        annotations:
                          backstage.io/techdocs-ref: dir:.
                      spec:
                        type: service
                        owner: test
                        lifecycle: experimental
              - step:
                  type: DirectPush
                  name: Push Code into Repo
                  identifier: directpush
                  spec:
                    connectorType: Github
                    connectorRef: account.democonnector
                    organization: <+pipeline.variables.organization>
                    repository: <+pipeline.variables.project_name>
                    codeDirectory: <+pipeline.variables.project_name>
                    branch: <+pipeline.variables.direct_push_branch>
              - step:
                  type: RegisterCatalog
                  name: Register Component in IDP
                  identifier: registercatalog
                  spec:
                    connectorType: Github
                    connectorRef: account.democonnector
                    organization: <+pipeline.variables.organization>
                    repository: <+pipeline.variables.project_name>
                    filePath: <+pipeline.variables.catalog_file_name>
                    branch: <+pipeline.variables.direct_push_branch>
          cloneCodebase: false
          caching:
            enabled: false
            paths: []
          platform:
            os: Linux
            arch: Amd64
          runtime:
            type: Cloud
            spec: {}

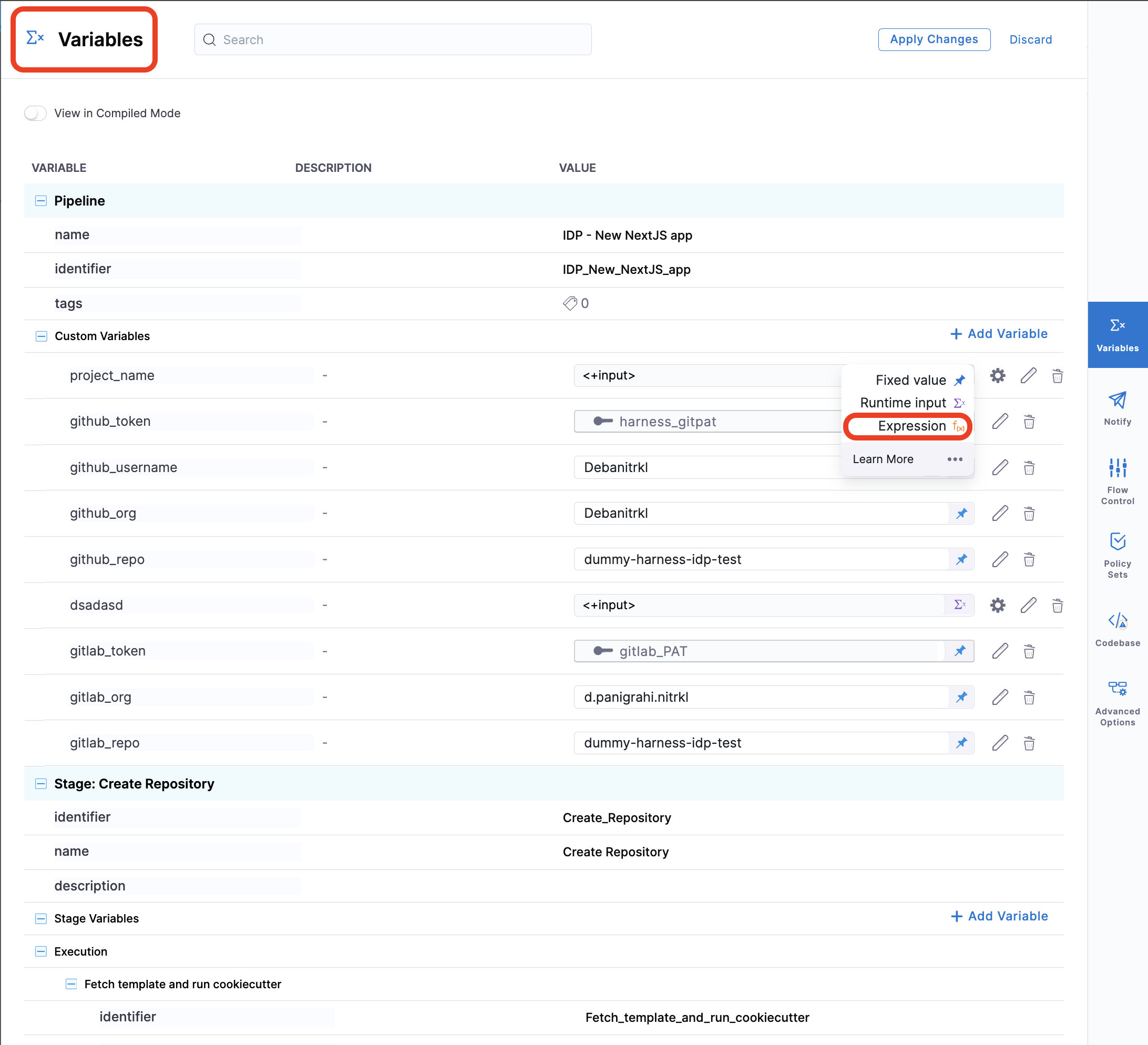

  variables:
    - name: test_content
      type: String
      description: ""
      required: false
      value: devesh
    - name: project_name
      type: String
      description: ""
      required: false
      value: <+input>
    - name: organization
      type: String
      description: ""
      required: false
      value: <+input>
    - name: public_cookiecutter_template_url
      type: String
      description: ""
      required: false
      value: <+input>
    - name: repository_type
      type: String
      description: ""
      required: false
      value: <+input>.default(private).allowedValues(private,public)
    - name: repository_description
      type: String
      description: ""
      required: false
      value: <+input>
    - name: repository_default_branch
      type: String
      description: ""
      required: false
      value: <+input>
    - name: direct_push_branch
      type: String
      description: ""
      required: false
      value: <+input>
    - name: catalog_file_name
      type: String
      description: ""
      required: false
      value: catalog-info.yaml

Add the Custom Stage to Provision the Deployment Pipeline.

Pre-requisites

- Fork this repo, or download the folder and add it to your own Git provider, to be used as the Configuration File Repository. Also, in the harness_resources.tf file, update the org id, and in harness.tf, update the account_id.

Now, if you also use Harness CI/CD, you can follow the steps below to create a Custom Stage with a Terraform Apply step to provision the Harness deployment pipeline.

-

Add a Custom Stage, after the Developer Portal Stage.

-

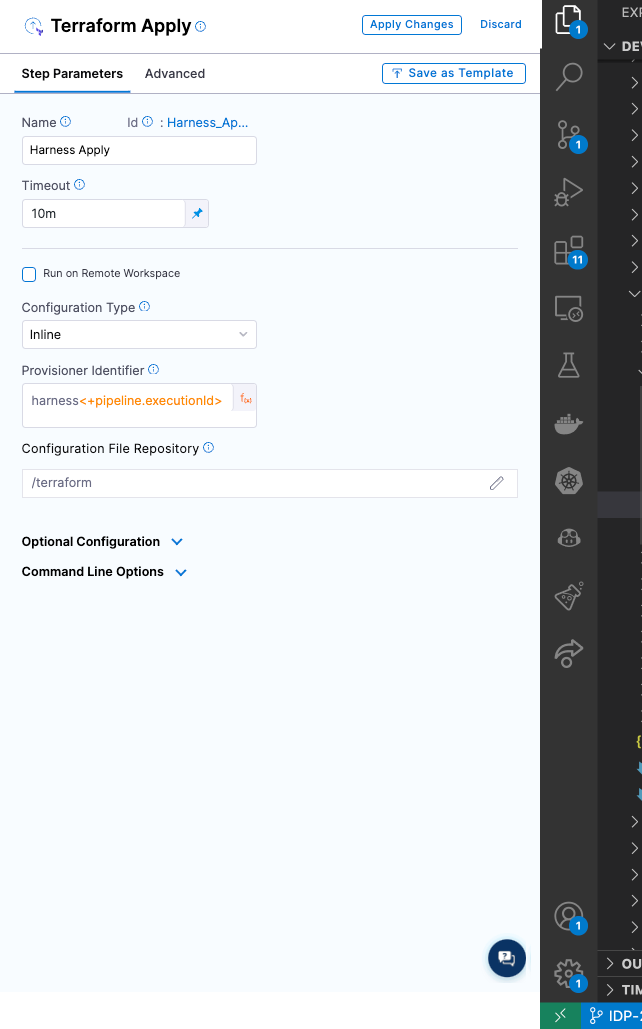

Add the Terraform Apply step, that could provision Harness Pipeline for the newly created service.

While you add the Terraform Apply step make sure to add the Provisioner Identifier as an expression as harness<+pipeline.executionId>

You could find the config files to provision a pipeline. Now create a connector to add this config files.

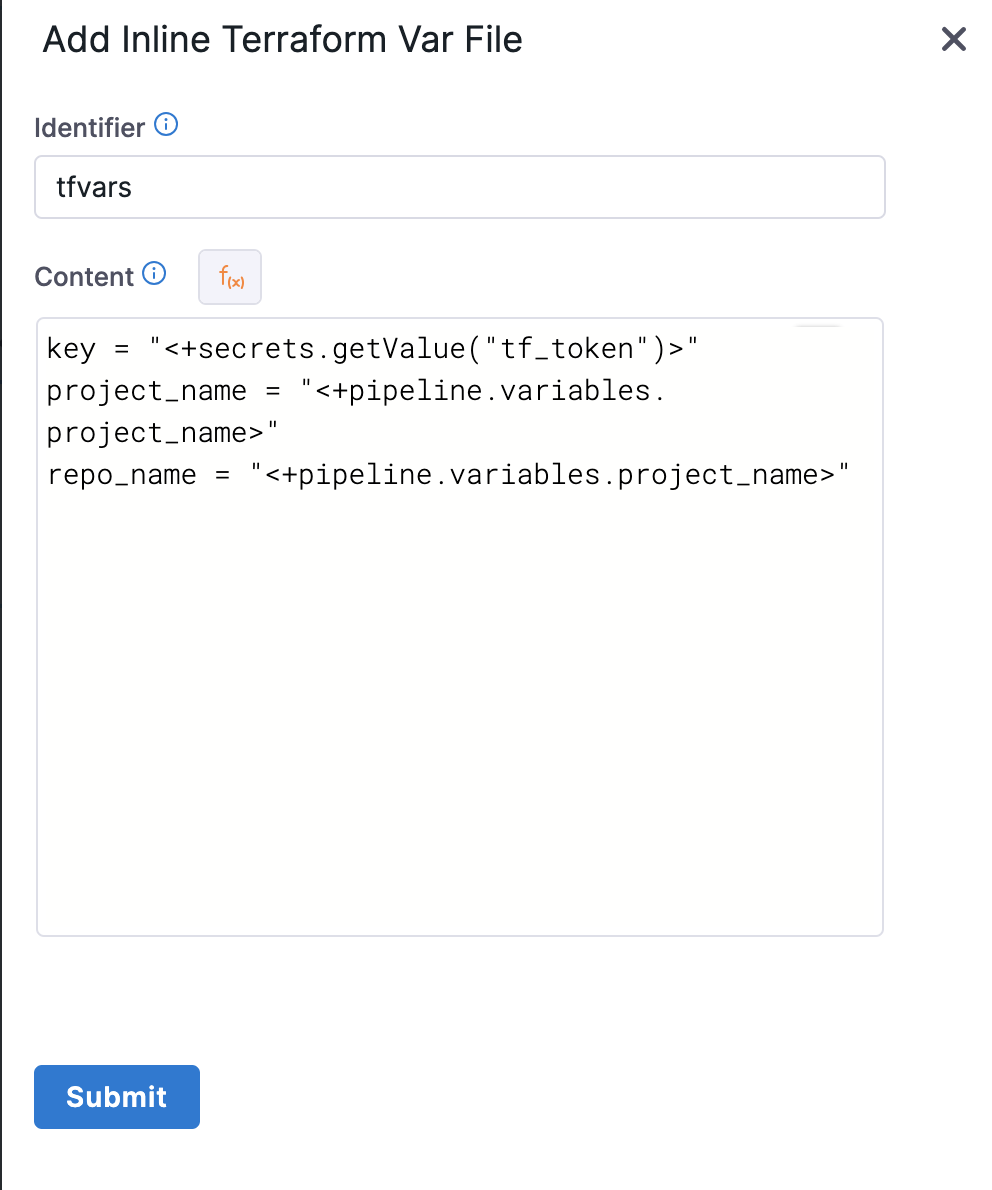

We also have a Terraform Var Files, as mentioned in the below example YAML.

# Example
- stage:
    name: Build out Harness
    identifier: Build_out_Harness
    description: ""
    type: Custom
    spec:
      execution:
        steps:
          - step:
              type: TerraformApply
              name: Harness Apply
              identifier: Harness_Apply
              spec:
                provisionerIdentifier: harness<+pipeline.executionId>
                configuration:
                  type: Inline
                  spec:
                    configFiles:
                      store:
                        spec:
                          connectorRef: Github_IDP_Organization
                          repoName: idp-terraform
                          gitFetchType: Branch
                          branch: master
                          folderPath: terraform
                        type: Github
                    varFiles:
                      - varFile:
                          spec:
                            content: |-
                              key = "<+secrets.getValue("tf_token")>"
                              project_name = "<+pipeline.variables.project_name>"
                              repo_name = "<+pipeline.variables.project_name>"
                          identifier: tfvars
                          type: Inline
              timeout: 10m

- Now save the Pipeline.

Workflows currently support pipelines that are composed only of an IDP Stage, a Custom Stage, and a CI Stage with a Run step with the codebase disabled. Additionally, all inputs, except for pipeline variables, must be fixed values.
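The restriction above can be checked before saving a pipeline. The following is a minimal sketch, not a Harness tool: it assumes the pipeline stages have already been loaded into plain Python dictionaries shaped like the YAML in this tutorial, it reads the rule as "a CI stage may contain only Run steps", and it assumes a missing cloneCodebase key means the codebase is enabled. The function name unsupported_stages is hypothetical.

```python
# Stage types that the tutorial says Workflows can trigger.
ALLOWED_STAGE_TYPES = {"IDP", "Custom", "CI"}


def unsupported_stages(stages):
    """Return human-readable problems for stages a Workflow could not run."""
    problems = []
    for stage in stages:
        name, kind = stage["name"], stage["type"]
        spec = stage.get("spec", {})
        if kind not in ALLOWED_STAGE_TYPES:
            problems.append(f"{name}: stage type {kind} is not supported")
        elif kind == "CI":
            # Assumption: an absent cloneCodebase key counts as enabled.
            if spec.get("cloneCodebase", True):
                problems.append(f"{name}: CI stage must disable the codebase")
            steps = spec.get("execution", {}).get("steps", [])
            # Assumption: only Run steps are acceptable inside a CI stage.
            if any(item["step"]["type"] != "Run" for item in steps):
                problems.append(f"{name}: CI stage may only contain Run steps")
    return problems


# The two stages built in this tutorial, reduced to the fields that matter.
tutorial_stages = [
    {"name": "self-service-flow", "type": "IDP", "spec": {"cloneCodebase": False}},
    {"name": "Build out Harness", "type": "Custom", "spec": {}},
]

print(unsupported_stages(tutorial_stages))
```

An empty list means every stage falls inside the supported set as read above.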

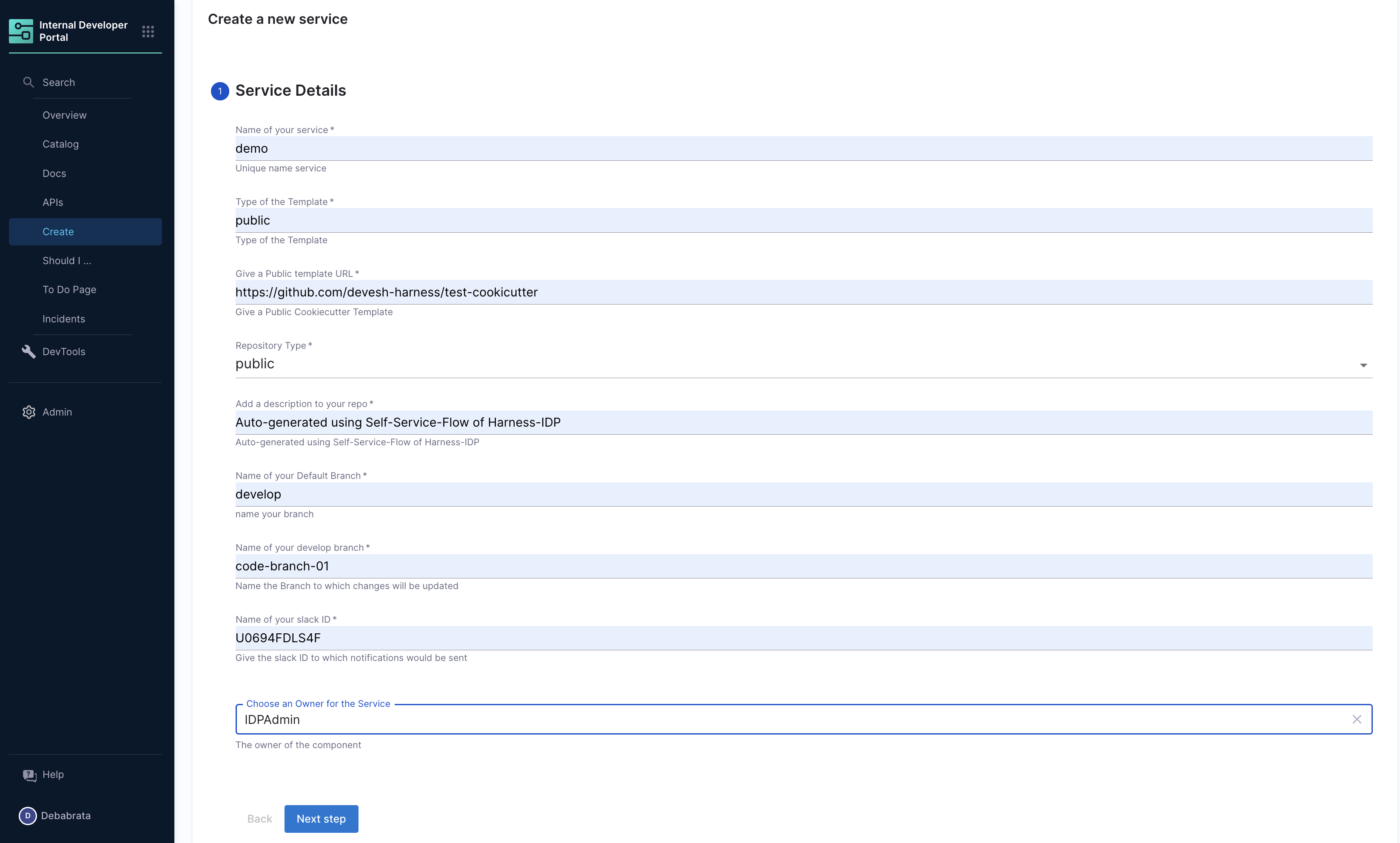

Create a Workflow

Now that our pipeline is ready to execute when a project name and a GitHub repository name are provided, let's create the UI counterpart of it in IDP. This is powered by the Backstage Software Template. Create a workflow.yaml file anywhere in your Git repository. Usually, that would be the same place as your cookiecutter template. We use the react-jsonschema-form playground to build the template. Nunjucks is the templating engine for the IDP templates.

Adding the owner

By default, the owner is of type Group, which is the same as the User Group in Harness. If the owner is a user, specify it as user:default/debabrata.panigrahi; it should contain only the username, not the complete email ID.
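As an illustration of the owner format described above, here is a small sketch of a helper that builds the reference string and drops the domain part of an email ID. The function name owner_entity_ref and the example.com address are hypothetical; only the kind:default/name shape comes from this tutorial.

```python
def owner_entity_ref(owner: str, kind: str = "group", namespace: str = "default") -> str:
    """Build an owner reference such as 'user:default/jane.doe'."""
    # Keep only the part before '@' so a complete email ID is never used.
    name = owner.split("@", 1)[0].strip()
    if not name:
        raise ValueError("owner must contain a non-empty username")
    return f"{kind}:{namespace}/{name}"


print(owner_entity_ref("debabrata.panigrahi@example.com", kind="user"))
```

The default kind is group, mirroring the default owner type mentioned above.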

apiVersion: scaffolder.backstage.io/v1beta3
kind: Template
metadata:
  name: new-service
  title: Create a new service
  description: A template to create a new service
  tags:
    - nextjs
    - react
    - javascript
spec:
  owner: owner@company.com
  type: service
  parameters:
    - title: Service Details
      required:
        - project_name
        - organization_name
        - public_template_url
        - repository_type
        - repository_description
        - repository_default_branch
        - direct_push_branch
      properties:
        public_template_url:
          title: Public Cookiecutter Template URL
          type: string
          default: https://github.com/devesh-harness/test-cookicutter
          description: URL to a Cookiecutter template. For the tutorial you can use the default input
        organization_name:
          title: Git Organization
          type: string
          description: Name of your organization in Git
        project_name:
          title: Name of your service
          type: string
          description: Your repo will be created with this name
        repository_type:
          type: string
          title: Repository Type
          enum:
            - public
            - private
          default: public
        repository_description:
          type: string
          title: Add a description to your repo
        repository_default_branch:
          title: Name of your Default Branch
          type: string
          default: main
        direct_push_branch:
          title: Name of your Develop branch
          type: string
          default: develop
        owner:
          title: Choose an Owner for the Service
          type: string
          ui:field: OwnerPicker
          ui:options:
            allowedKinds:
              - Group
        # This field is hidden but needed to authenticate the request to trigger the pipeline
        token:
          title: Harness Token
          type: string
          ui:widget: password
          ui:field: HarnessAuthToken
  steps:
    - id: trigger
      name: Bootstrapping your new service
      action: trigger:harness-custom-pipeline
      input:
        url: "YOUR PIPELINE URL HERE"
        inputset:
          organization: ${{ parameters.organization_name }}
          project_name: ${{ parameters.project_name }}
          public_cookiecutter_template_url: ${{ parameters.public_template_url }}
          repository_type: ${{ parameters.repository_type }}
          repository_description: ${{ parameters.repository_description }}
          repository_default_branch: ${{ parameters.repository_default_branch }}
          direct_push_branch: ${{ parameters.direct_push_branch }}
        apikey: ${{ parameters.token }}
  output:
    links:
      - title: Pipeline Details
        url: ${{ steps.trigger.output.PipelineUrl }}

Replace YOUR PIPELINE URL HERE with the URL of the pipeline that you created.
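Before pasting the URL into workflow.yaml, it can help to confirm that it really points at a pipeline. The sketch below is only a sanity check under an assumption: that the pipeline URL contains account/<id>, orgs/<org>, projects/<project>, and pipelines/<pipeline> path segments in that order. The sample URL and its identifiers are made up for illustration, and parse_pipeline_url is a hypothetical helper, not part of Harness.

```python
import re

# Assumed shape: https://<host>/.../account/<id>/.../orgs/<org>/projects/<project>/pipelines/<pipeline>/...
PIPELINE_URL = re.compile(
    r"^https://[^/]+/.*?account/(?P<account>[^/]+)/.*?"
    r"orgs/(?P<org>[^/]+)/projects/(?P<project>[^/]+)/pipelines/(?P<pipeline>[^/?#]+)"
)


def parse_pipeline_url(url: str) -> dict:
    """Split a pipeline URL into its identifiers, or raise ValueError."""
    match = PIPELINE_URL.match(url)
    if match is None:
        raise ValueError(f"not a recognizable pipeline URL: {url!r}")
    return match.groupdict()


# Hypothetical URL used only to exercise the parser.
sample = (
    "https://app.harness.io/ng/account/ACCT123/module/idp-admin/"
    "orgs/default/projects/onboarding/pipelines/selfserviceflow/pipeline-studio"
)
print(parse_pipeline_url(sample))
```

A leftover placeholder such as YOUR PIPELINE URL HERE fails the match and raises ValueError, which makes the mistake visible early.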

This YAML code is governed by Backstage. You can change the name and description of the Workflow. The template has the following parts:

- Input from the user

- Execution of pipeline

Let's take a look at the inputs that the template expects from a developer. The inputs are written in the spec.parameters field. It has two parts, but you can combine them. The keys in properties are the unique IDs of fields (for example, organization_name and project_name). Through the inputset in the trigger step, they map to the pipeline variables that we set as runtime inputs earlier. This is what we want the developer to enter when creating their new application.

The YAML definition includes fields such as cloud provider and database choice. They are for demonstration purposes only and are not used in this tutorial.
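The link between the form and the pipeline is the inputset: each key there should name a pipeline variable that was left as a runtime input. A minimal sketch of that cross-check follows, with the variable values and inputset keys copied from the YAML in this tutorial into plain Python literals; the check_inputset helper itself is hypothetical.

```python
# Pipeline variables and their configured values, as defined earlier.
pipeline_variables = {
    "test_content": "devesh",
    "project_name": "<+input>",
    "organization": "<+input>",
    "public_cookiecutter_template_url": "<+input>",
    "repository_type": "<+input>.default(private).allowedValues(private,public)",
    "repository_description": "<+input>",
    "repository_default_branch": "<+input>",
    "direct_push_branch": "<+input>",
    "catalog_file_name": "catalog-info.yaml",
}

# Keys under steps[0].input.inputset in workflow.yaml.
inputset_keys = {
    "organization",
    "project_name",
    "public_cookiecutter_template_url",
    "repository_type",
    "repository_description",
    "repository_default_branch",
    "direct_push_branch",
}


def check_inputset(variables, inputset):
    """Compare runtime-input variables with the keys the workflow sends."""
    runtime = {name for name, value in variables.items() if value.startswith("<+input>")}
    return {
        "missing": sorted(runtime - inputset),   # runtime inputs the workflow never sets
        "unknown": sorted(inputset - runtime),   # keys the pipeline does not expect
    }


print(check_inputset(pipeline_variables, inputset_keys))
```

For the YAML in this tutorial both lists come back empty, so every runtime input is supplied by the form.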

Authenticating the Request to the Pipeline

The Workflow contains a single action, which is designed to trigger the pipeline you created via an API call. Since the API call requires authentication, Harness has created a custom component that authenticates based on the logged-in user's credentials.

The following YAML snippet under spec.parameters.properties automatically creates a token field without exposing it to the end user.

token:
  title: Harness Token
  type: string
  ui:widget: password
  ui:field: HarnessAuthToken

The token property we use to fetch the Harness Auth Token is hidden on the Review step using ui:widget: password. For this to work, the token property must be defined on the first page if your form has multiple pages.

# example workflow.yaml
...
parameters:
  - title: <PAGE-1 TITLE>
    properties:
      property-1:
        title: title-1
        type: string
      property-2:
        title: title-2
      token:
        title: Harness Token
        type: string
        ui:widget: password
        ui:field: HarnessAuthToken
  - title: <PAGE-2 TITLE>
    properties:
      property-1:
        title: title-1
        type: string
      property-2:
        title: title-2
  - title: <PAGE-n TITLE>
...
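The first-page rule is easy to break when pages are reordered. The sketch below shows one way to check it, assuming the parameters list has already been loaded into Python dictionaries with the same keys as the YAML above; token_page_index is a hypothetical helper and the page titles are placeholders.

```python
def token_page_index(parameters):
    """Return the index of the page that holds the HarnessAuthToken field, or None."""
    for index, page in enumerate(parameters):
        for prop in page.get("properties", {}).values():
            if prop.get("ui:field") == "HarnessAuthToken":
                return index
    return None


token_field = {
    "title": "Harness Token",
    "type": "string",
    "ui:widget": "password",
    "ui:field": "HarnessAuthToken",
}

# Token on the first page, as the tutorial requires.
pages = [
    {"title": "Page 1", "properties": {"property-1": {"type": "string"}, "token": token_field}},
    {"title": "Page 2", "properties": {"property-1": {"type": "string"}}},
]

if token_page_index(pages) != 0:
    raise SystemExit("token must be defined on the first page")
print("token is on the first page")
```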

That token is then passed to the trigger step as apikey:

steps:
  - id: trigger
    name: ...
    action: trigger:harness-custom-pipeline
    input:
      url: ...
      inputset:
        key: value
        ...
      apikey: ${{ parameters.token }}

Register the Workflow in IDP

Use the URL to the workflow.yaml created above and register it by using the same process for registering a new software component.

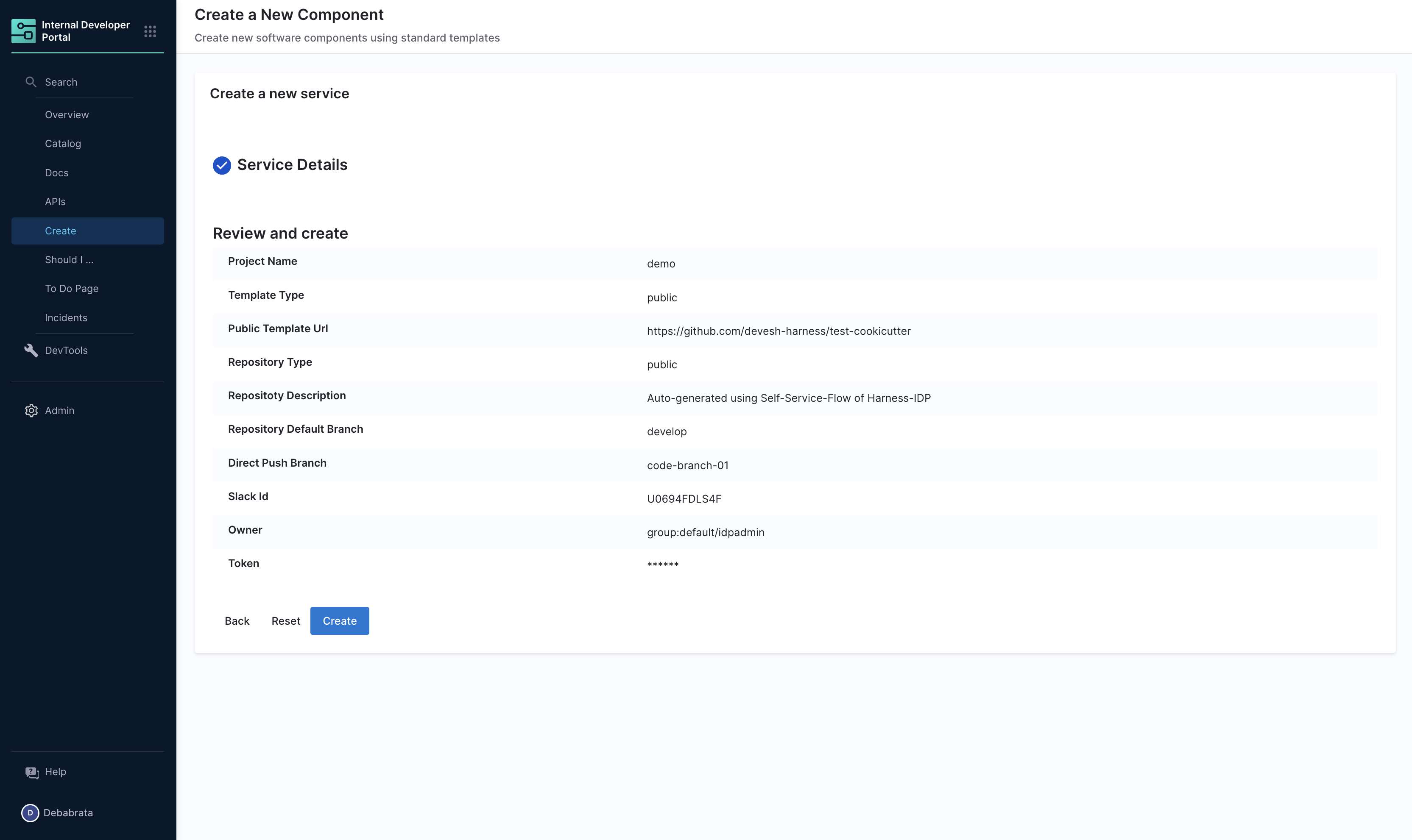

Use the Self Service Workflows

Now navigate to the Workflows page in IDP. You will see the newly created Workflow appear. Click on Choose, fill in the form, click Next Step, then Create to trigger the automated pipeline. Once complete, you should be able to see the new service created using the Cookiecutter template added to the Harness IDP software catalog as a software component, with its metadata defined in the catalog-info.yaml. Additionally, a Deployment Pipeline for the service will be provisioned and ready for execution, leveraging the capabilities of the Harness Terraform Provider.

Unregister/Delete Workflow

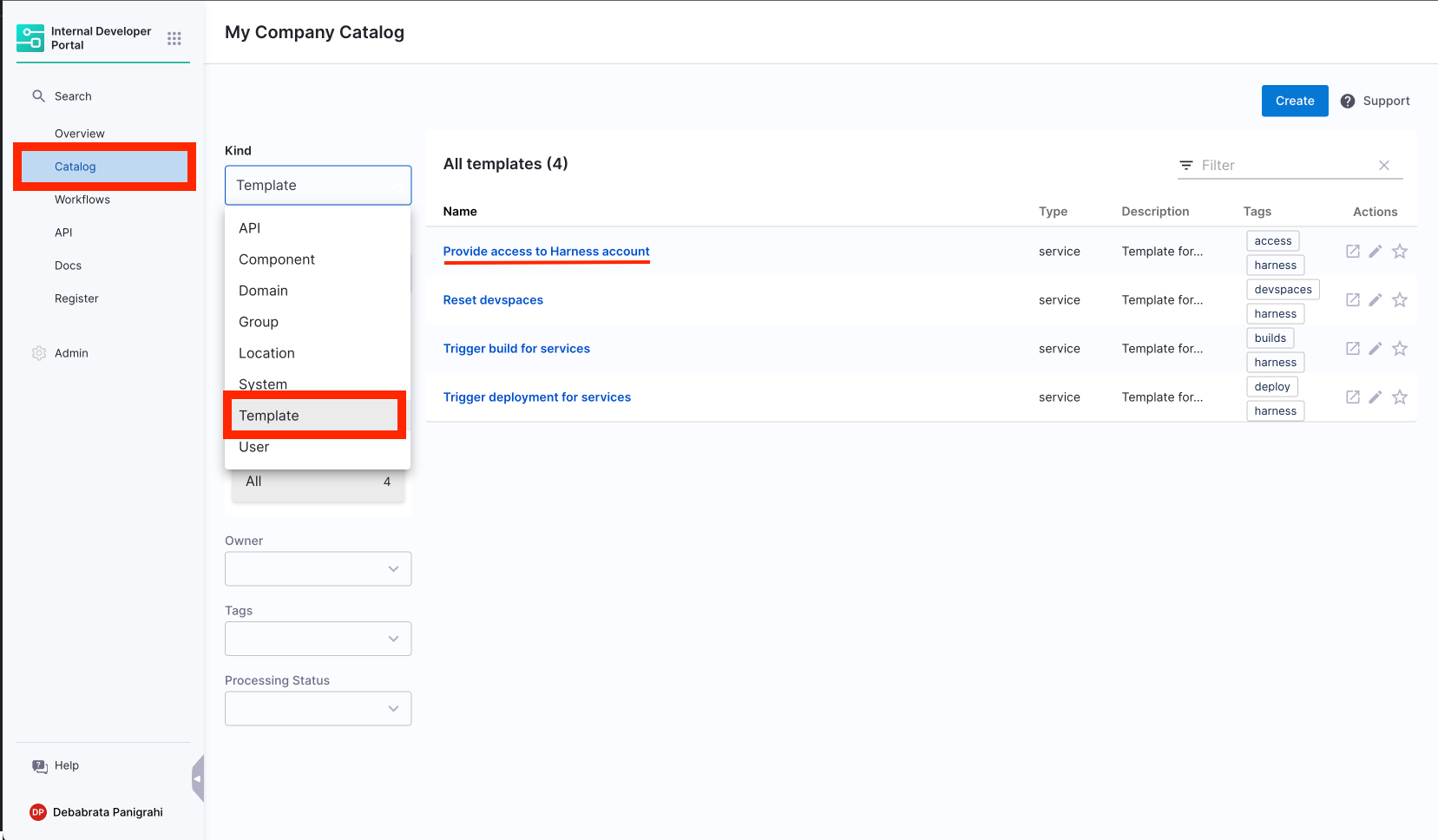

- Navigate to the Catalog page, and select Template under Kind.

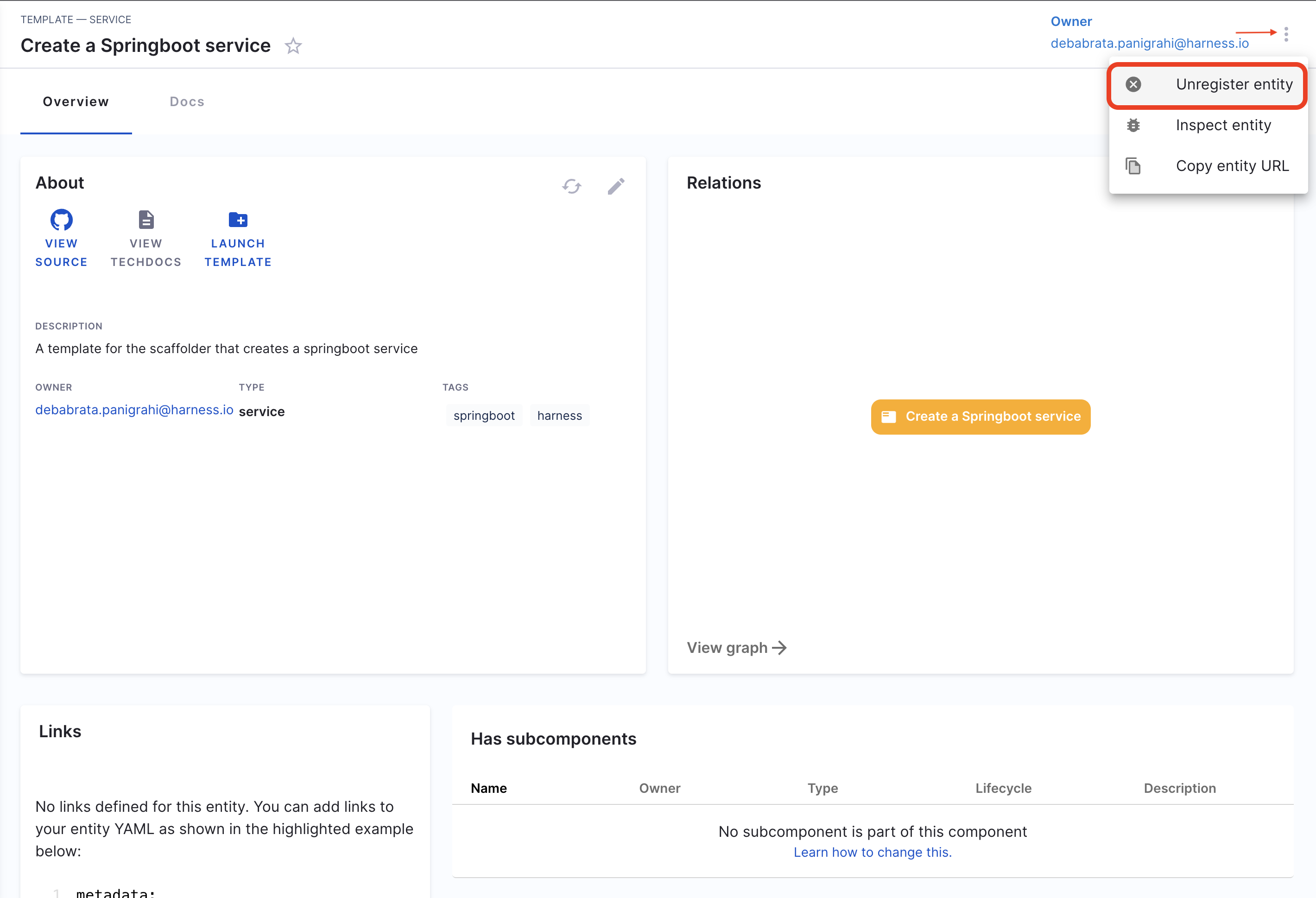

- Select the Workflow Name you want to Unregister.

- On the Workflow overview page, click the three dots in the top-right corner and select Unregister Entity.

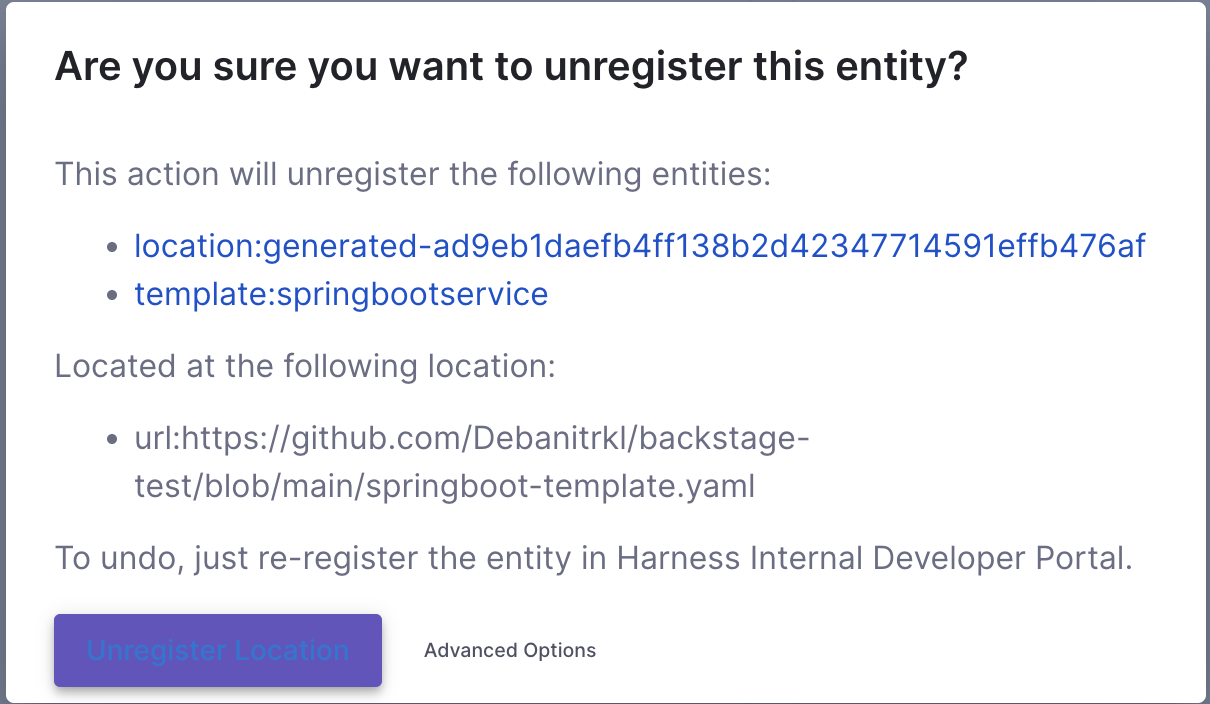

- Now on the Dialog box select Unregister Location.

- This will delete the Workflow.